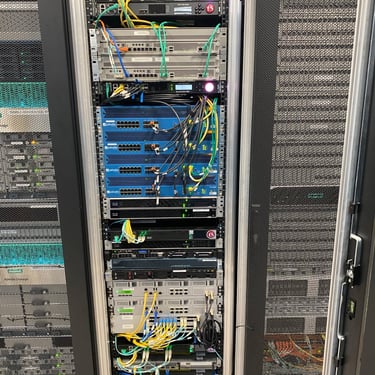

I began working with IT infrastructure early in my career. I was a network administrator and spent my time updating port VLAN assignments, replacing dead switches, upgrading firmware, and studying for the next certification exam. When the word came down that we were about to start building a datacenter and several new server rooms, I was up for the challenge. We were an IT team of eleven then. Volunteers were not turned away.

I define infrastructure as all of the components required to house, support, connect, and secure large-scale computing environments. This includes electrical and backup power systems, cooling systems, environmental monitoring systems, and security systems. It also includes equipment racks, hardware, and copper and fiber optic cabling.

I learned infrastructure quickly and enjoyed the work. It's precision work that requires focus and attention to detail. Critical systems must be redundant, load balanced, fault-tolerant, routinely tested, well documented, and monitored closely so that any deviation outside a prescribed threshold notifies the appropriate person, team, or on-call engineer.
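To illustrate the idea of threshold-based notification, here is a minimal Python sketch. Every name and number in it (`Threshold`, `check`, the sensor name, the limits, the `on-call engineer` recipient) is a hypothetical placeholder chosen for illustration, not a description of any particular monitoring product or of the systems described in this post.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Threshold:
    """Acceptable range for one monitored value (all values hypothetical)."""
    name: str      # e.g. a sensor label
    low: float     # lowest acceptable reading
    high: float    # highest acceptable reading
    notify: str    # person, team, or on-call rotation to alert


def check(reading: float, t: Threshold) -> Optional[str]:
    """Return an alert message if the reading is outside the range, else None."""
    if reading < t.low or reading > t.high:
        return (f"ALERT for {t.notify}: {t.name} reading {reading} "
                f"is outside {t.low}..{t.high}")
    return None


# Hypothetical example: a cold-aisle temperature sensor in degrees Celsius.
cold_aisle = Threshold("cold-aisle-temp-C", low=18.0, high=27.0,
                       notify="on-call engineer")
print(check(24.5, cold_aisle))   # within range, prints None
print(check(31.0, cold_aisle))   # out of range, prints an alert message
```

In practice the reading would come from a sensor or management card and the message would go to a paging or ticketing system; both are left out here to keep the sketch self-contained.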

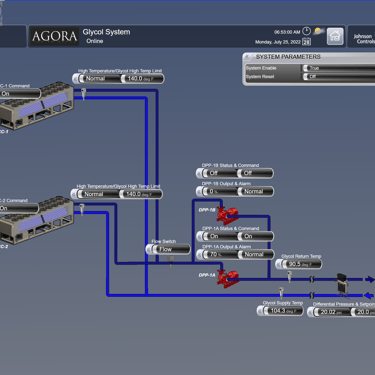

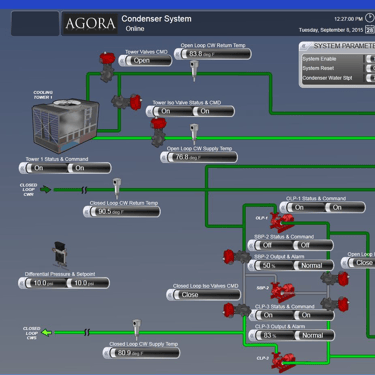

Infrastructure includes generators and load banks, transfer switches, breaker panels and transformers, UPSs and PDUs. It's pumps, drive controllers and valves, heat exchangers and cooling towers, humidifiers and A/C units. A lot of this equipment can be controlled and monitored over the network using remote management cards. However, if it's on the network, it's going to need quarterly maintenance: firmware upgrades, security patching, configuration audits, and password rotations, just to name a few.

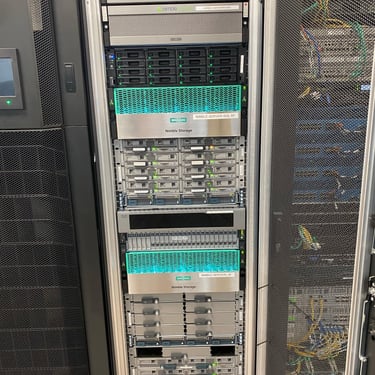

Then there's the IT equipment. Infrastructure engineers must know about network, compute, and storage systems, and every flavor of vendor appliance. You need to understand their connectivity requirements, power draw, and heat load. You'll need to know that amperage draw will spike and risk tripping breakers if an entire rack of ESXi hosts or UCS blade servers powers on all at once, and how heat generation increases with server workload. What about Nimble Storage controller failover behavior? Single-mode vs. multi-mode? SFP transceivers or Twinax? UCS Fabric Interconnect or Nexus FEX? This is all infrastructure.
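The power-on spike is easy to see with some rough arithmetic. The sketch below plans a staggered start so that servers already running (at steady draw) plus the batch currently starting (at inrush draw) stay under a circuit limit. The function name `plan_batches` and every figure in the example (8 servers, 2 A steady, 6 A inrush, a 24 A usable limit) are made-up assumptions for illustration; real values come from vendor specifications and the actual breaker rating.

```python
from typing import List


def plan_batches(n_servers: int, steady_amps: float,
                 inrush_amps: float, limit_amps: float) -> List[int]:
    """Greedy plan: start as many servers per batch as the circuit allows.

    Servers started in earlier batches are assumed to have settled to
    steady_amps; servers in the current batch each draw inrush_amps.
    """
    batches: List[int] = []
    running = 0
    while running < n_servers:
        headroom = limit_amps - running * steady_amps
        k = min(int(headroom // inrush_amps), n_servers - running)
        if k < 1:
            raise ValueError("circuit cannot carry this rack even when staggered")
        batches.append(k)
        running += k
    return batches


# Hypothetical rack: all 8 servers at once would draw 8 * 6 = 48 A of inrush
# against a 24 A limit, so the start has to be split into batches.
print(plan_batches(8, steady_amps=2.0, inrush_amps=6.0, limit_amps=24.0))
# → [4, 2, 2]
```

The greedy choice keeps the total start time short; a more conservative plan would simply cap the batch size at a smaller fixed number.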

I routinely tested the systems in my datacenters. Fun fact: in the event of a catastrophic cooling failure (or a triggered safety-override mechanism), I had twelve minutes to get someone on site to open the hot aisle containment doors and vent the heat from the room. After twelve minutes, all of the servers would enter self-preservation mode and begin shutting down. Consider that your A/C units are glycol liquid cooled. A pinhole leak in a condenser coil, a drip at the high-pressure supply-side fitting, or a backed-up condensate drain line, and now you have a flood, which triggers the aforementioned safety override. There is simply no room for error in infrastructure.

Datacenter Systems and Infrastructure
